How might we better teach musical rhythm by taking advantage of spatial and tactile feedback?

- Timeline: 2.5 days

- Team: MIT Reality Hack team of 5

- Role: Interaction Design, coding/modeling of the visible UI, Audio Design

- Notable Tools: Unity, C#, Ableton Live

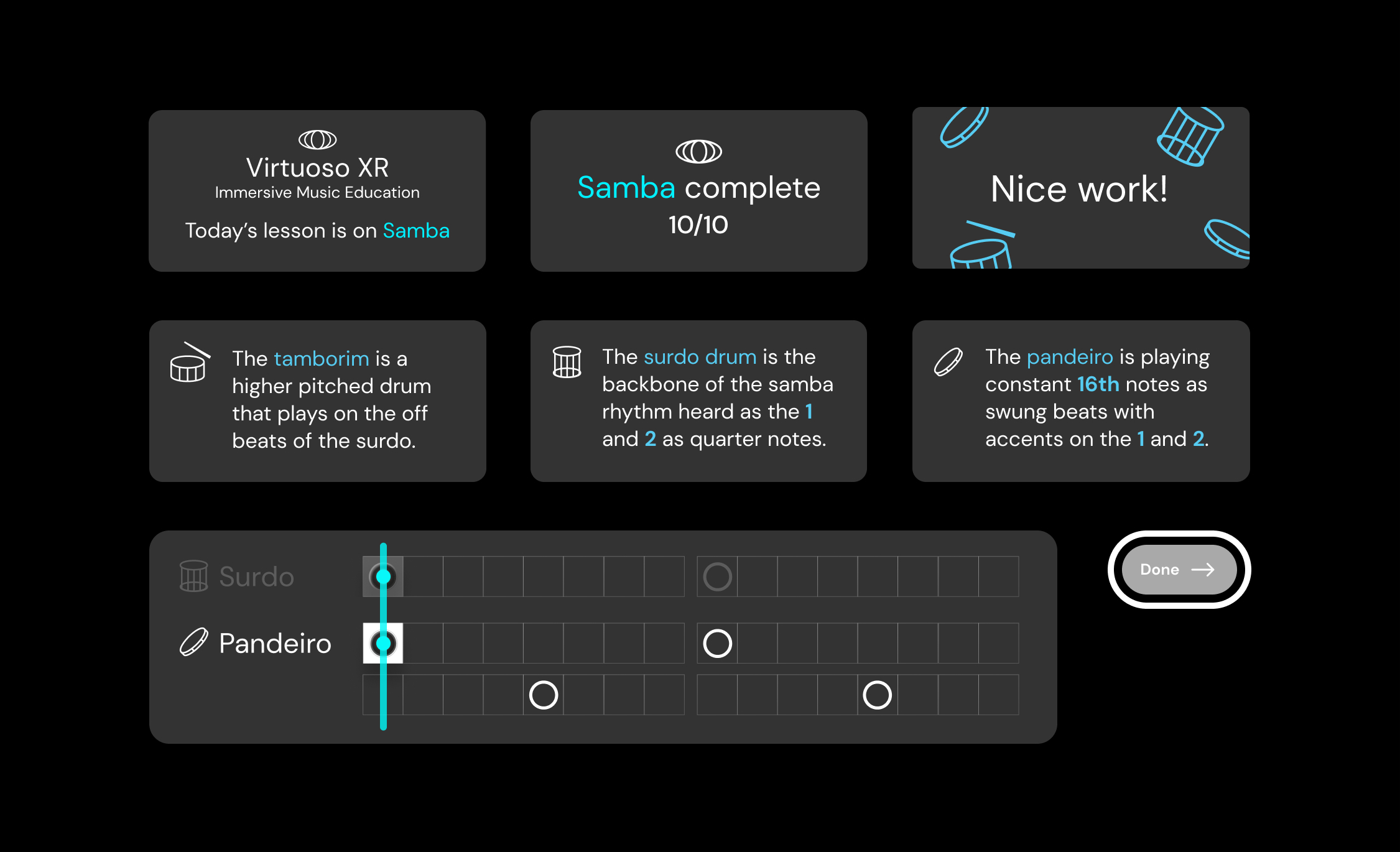

Virtuoso XR is an early music education concept built during the MIT Reality Hack that turns any physical surface into a magical tactile instrument.

Outside of formal music instruction, people often learn instruments by stumbling through online video tutorials. While these are widely available, they are limited in how they can teach. There’s a large jump between passively watching a flat video and the kinesthetic process of learning coordination, posture, and dynamics on an instrument.

Our group aimed to bridge this gap by teaching rhythm through a mix of tactile and visual feedback. For the prototype, we chose samba drumming as a foundational style because of its 2/4 time signature and its diversity of instruments (the surdo, tamborim, and pandeiro). More complex topics, like polyrhythm, can also be taught by breaking them down into manageable layers and combining them later.
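The layering idea can be illustrated with a 3-against-2 polyrhythm. This is a minimal sketch in Python. The prototype itself was built in C# in Unity and did not cover polyrhythm, so treat this purely as an illustration. Both layers share a grid of lcm(3, 2) = 6 steps, which lets each layer be practiced alone and then combined. The function names are hypothetical.

```python
from math import lcm


def polyrhythm_grid(a, b):
    """Lay out an a-against-b polyrhythm on a shared grid of lcm(a, b) steps.

    Each layer's hits are evenly spaced across one cycle, so each row can be
    practiced on its own before the two are played together.
    """
    steps = lcm(a, b)
    layer_a = [i * (steps // a) for i in range(a)]
    layer_b = [i * (steps // b) for i in range(b)]
    return steps, layer_a, layer_b


def render(steps, hits):
    """Render one layer as a row: 'X' where the layer is struck, '.' otherwise."""
    hit_set = set(hits)
    return "".join("X" if s in hit_set else "." for s in range(steps))
```

Here `polyrhythm_grid(3, 2)` returns `(6, [0, 2, 4], [0, 3])`, which `render` turns into the rows `X.X.X.` and `X..X..`. Both rows share the first step, and the remaining hits interleave.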

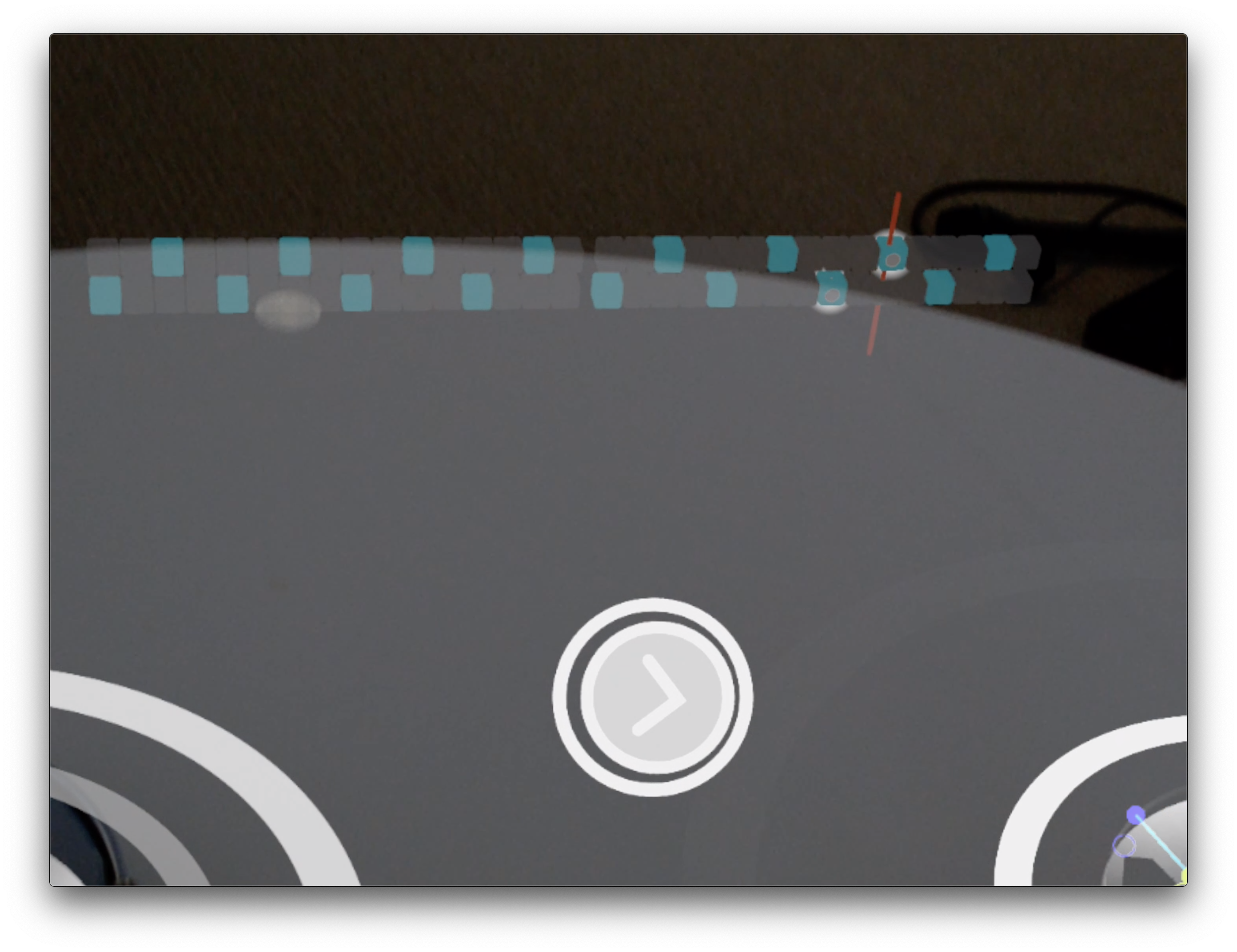

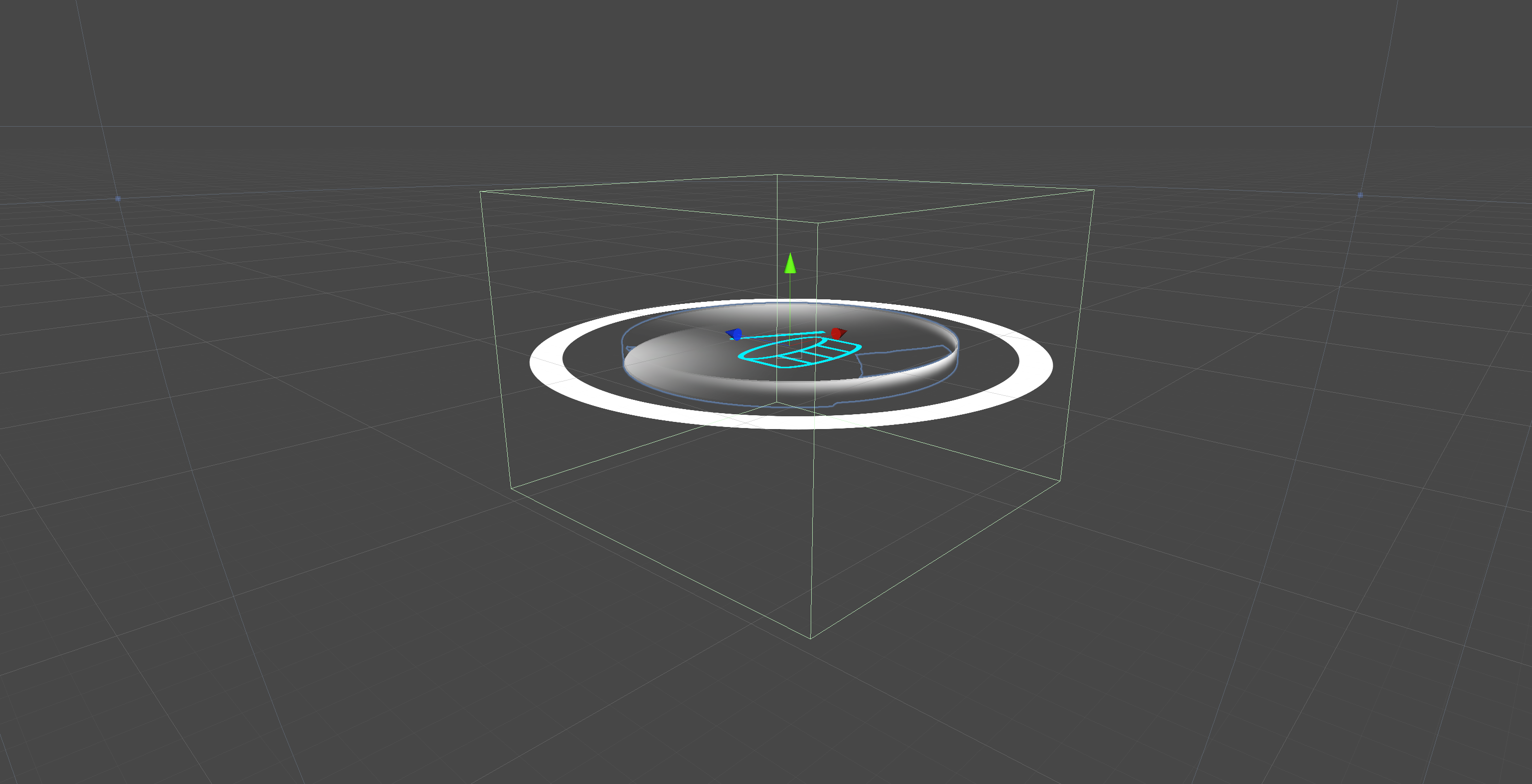

Virtuoso XR creates drum targets aligned with a real tabletop and provides cues for players to hit targets in time with an additive series of samba rhythms (imported through audio and MIDI tracks).

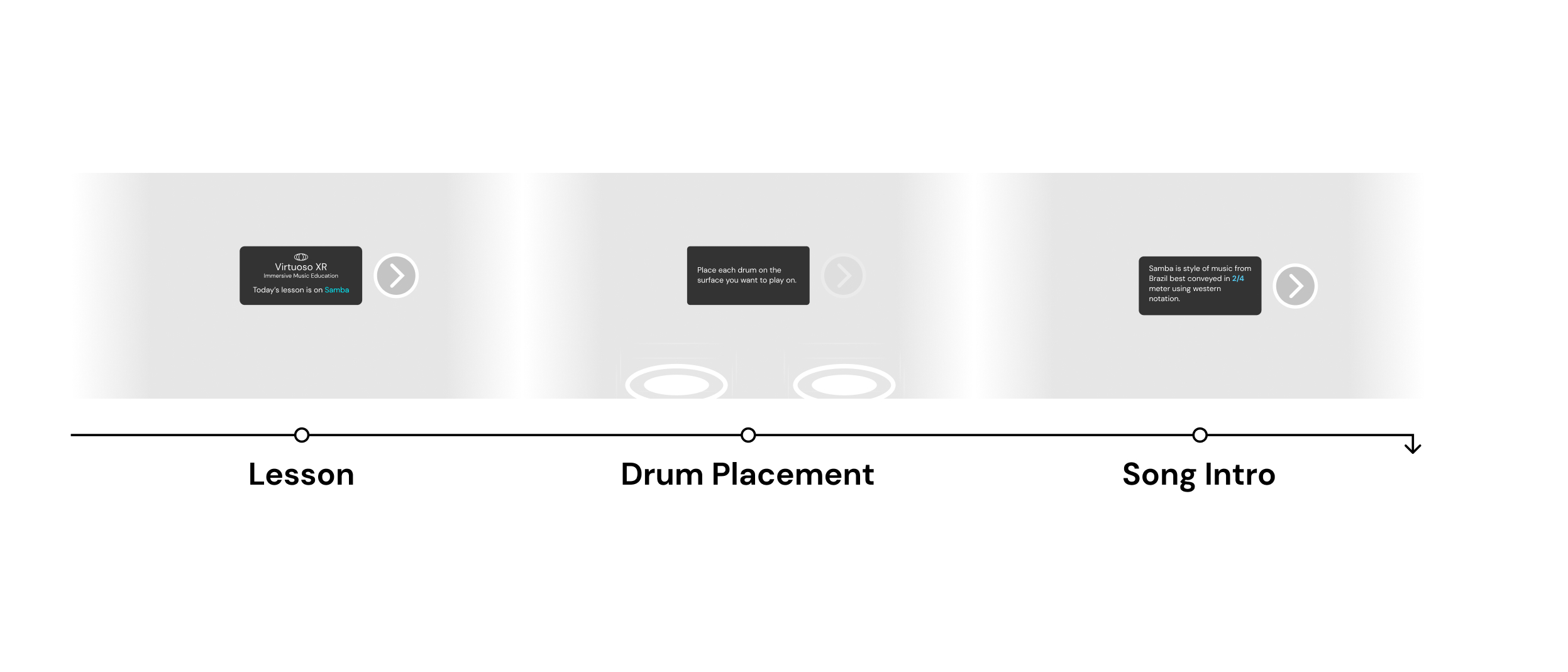

Moving back and forth between coding and testing, I worked with the team lead to define the minimum viable application loop. The first half consisted of an intro and a drum placement step.

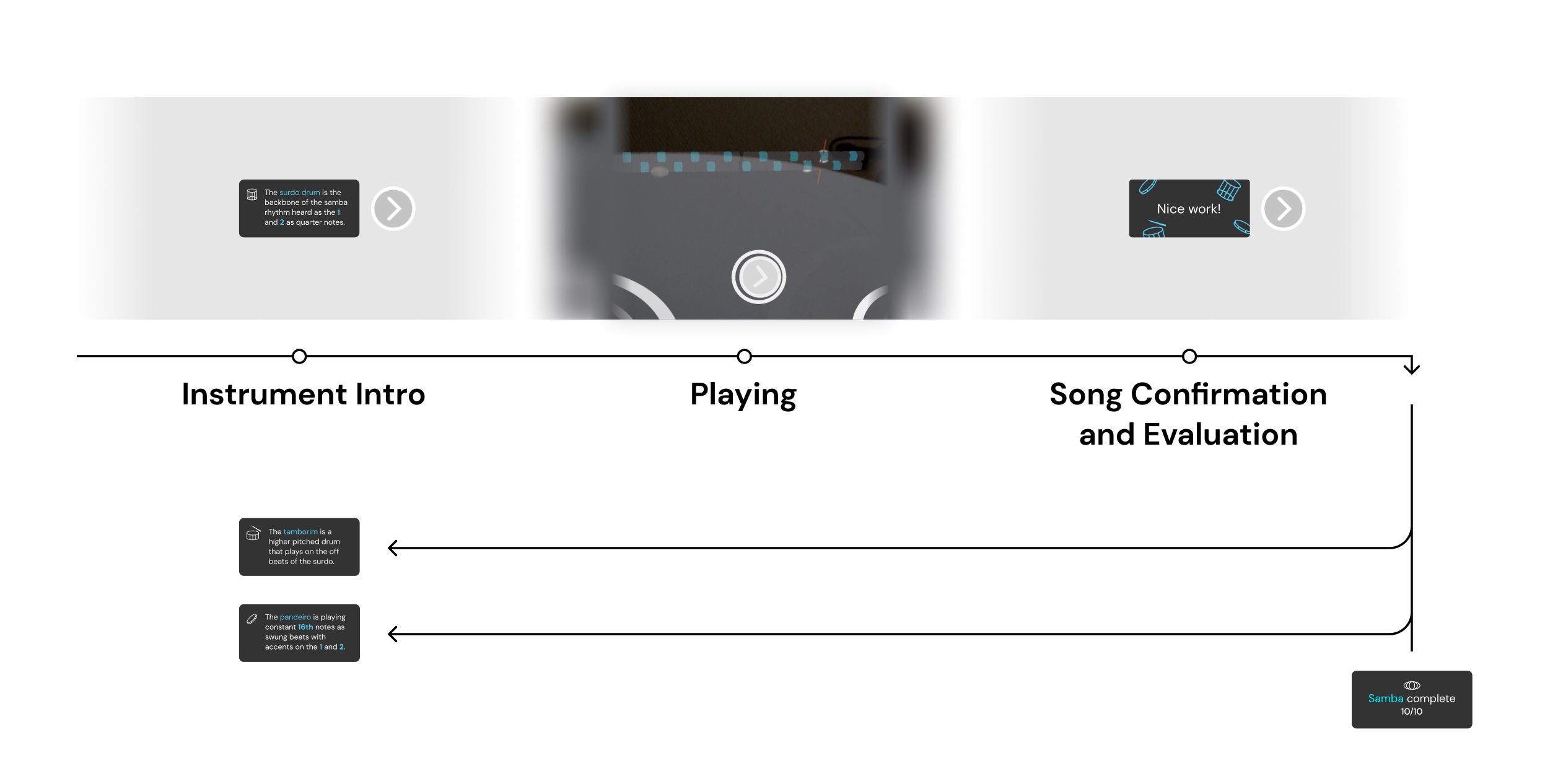

The second half consisted of instrument education, the core drumming experience and evaluation.

As we converged on an experience, we ran into technical and design challenges.

The Beaten Path

To feel like a responsive instrument, the drum overlays needed to sit at the same height as the physical surface. Manual placement could get us partway there, but we needed precise alignment.

Time and technical constraints prevented us from implementing intuitive plane snapping. The first drum placed would be the first drum used in the subsequent lesson, likely under the learner’s dominant hand. We also considered using a drum hit as the “next” interaction to teach people how the drums behave.

Our stopgap was to let people place drums based on their head position. Given more time, we would have built a system to ensure the colliders on the student’s hands and on the drums were appropriately spaced. This spacing was a key tradeoff: it had to register a hit early enough to count as well timed while still encouraging actual contact with the physical surface.

To alleviate this, we implemented a “within range” state that partially faded a drum out whenever the hand came close enough to hit it.
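Here is a minimal sketch of the “within range” fade, written in Python for brevity. The prototype was built in Unity with C#. The sketch assumes opacity is driven by the distance between a tracked hand point and a drum’s center. The radii, the minimum opacity, and the function name are hypothetical values chosen for illustration, not the project’s settings.

```python
import math

# Hypothetical tuning values (meters / opacity), not taken from the prototype.
FADE_START_RADIUS = 0.15  # hand closer than this starts fading the drum
HIT_RADIUS = 0.03         # hand closer than this is "within range of a hit"
MIN_ALPHA = 0.35          # the drum never fades out completely


def drum_alpha(hand_pos, drum_pos):
    """Return a drum overlay's opacity for the current hand position.

    Fully opaque when the hand is far away, fading linearly down to
    MIN_ALPHA as the hand approaches HIT_RADIUS, so the real surface
    stays visible at the moment of contact.
    """
    d = math.dist(hand_pos, drum_pos)  # Euclidean distance between (x, y, z) points
    if d >= FADE_START_RADIUS:
        return 1.0
    if d <= HIT_RADIUS:
        return MIN_ALPHA
    # t is 0.0 at HIT_RADIUS and 1.0 at FADE_START_RADIUS.
    t = (d - HIT_RADIUS) / (FADE_START_RADIUS - HIT_RADIUS)
    return MIN_ALPHA + t * (1.0 - MIN_ALPHA)
```

The sketch keeps a floor on the opacity rather than fading to zero. This is one way to keep the target readable while letting the real surface show through as the hand lands.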

Hit timing and feedback

We learned quickly while building the core drumming experience, leaning on each team member’s experience. I focused on coding the visual and auditory feedback for hits and misses, which the engineers hooked into. We generated the track timing with a MIDI analysis library.
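The hit/miss judgment can be sketched as follows. The sketch assumes three things: the MIDI analysis yields note-on positions in ticks, the tempo is constant, and a hit is graded by its distance from the nearest expected onset. The ticks-per-beat value, tempo, window sizes, and function names are all hypothetical. The library and thresholds the team actually used aren’t specified here, and the prototype itself was written in C#.

```python
from bisect import bisect_left

# Hypothetical timing windows (seconds) around each expected onset.
PERFECT_WINDOW = 0.05
GOOD_WINDOW = 0.12


def ticks_to_seconds(tick, ticks_per_beat, bpm):
    """Convert a MIDI tick position to seconds, assuming a constant tempo."""
    seconds_per_beat = 60.0 / bpm
    return tick / ticks_per_beat * seconds_per_beat


def judge_hit(hit_time, onsets):
    """Grade a drum hit against a sorted list of expected onset times.

    Returns (label, error), where error is hit_time minus the nearest
    onset (negative = early, positive = late).
    """
    if not onsets:
        return "miss", None
    i = bisect_left(onsets, hit_time)
    # The nearest onset is either just before or just after the hit.
    candidates = [onsets[j] for j in (i - 1, i) if 0 <= j < len(onsets)]
    nearest = min(candidates, key=lambda t: abs(hit_time - t))
    error = hit_time - nearest
    if abs(error) <= PERFECT_WINDOW:
        return "perfect", error
    if abs(error) <= GOOD_WINDOW:
        return "good", error
    return "miss", error
```

Take 480 ticks per beat at 100 BPM. Notes at ticks 0, 480, and 960 land at 0.0, 0.6, and 1.2 seconds. A hit at 0.63 s is graded "perfect" and a hit at 0.5 s is graded "good". A complete version would also count onsets that pass with no hit as misses.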

For the prototype, we made three progressively more challenging layers of drums:

- Surdo – Whole beats on one drum

- Tamborim – Quarter beats on two drums

- Pandeiro – Off beats on one drum

Additionally, we designed a bar inspired by the Time Unit Box System (TUBS) to help people visualize how each beat contributed to the groove.
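In a Time Unit Box System, each box stands for one equal unit of time, and a box is filled when a note sounds in it. Below is a minimal text rendering in Python, assuming a 2/4 bar split into sixteenth-note steps. The step patterns are hypothetical placeholders, not the exact rhythms from the prototype, and the function names are illustrative.

```python
# 2/4 time subdivided into sixteenth notes: 2 beats x 4 steps = 8 boxes per bar.
STEPS_PER_BEAT = 4
BEATS_PER_BAR = 2
STEPS_PER_BAR = STEPS_PER_BEAT * BEATS_PER_BAR

# Hypothetical patterns: 0-based step indices where each drum is struck.
PATTERNS = {
    "surdo": [0, 4],
    "tamborim": [0, 2, 3, 5, 6],
    "pandeiro": [2, 6],
}


def tubs_row(hits, steps=STEPS_PER_BAR):
    """Render one layer as a TUBS row: 'X' in a box for a hit, '.' for a rest."""
    hit_set = set(hits)
    return "|" + "|".join("X" if s in hit_set else "." for s in range(steps)) + "|"


def tubs_grid(patterns):
    """Stack one row per layer so every layer's boxes line up in time."""
    width = max(len(name) for name in patterns)
    return "\n".join(f"{name:<{width}} {tubs_row(hits)}" for name, hits in patterns.items())


if __name__ == "__main__":
    print(tubs_grid(PATTERNS))
```

Stacking the rows shows where the layers coincide (for example, on the downbeat) and where one layer fills another’s gaps.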

We also spent time on usability, reducing the number of interactive elements to keep things focused, within line of sight, and out of each other’s way.

Learnings

The concept of playing on real instruments resonated with a wide variety of people, and we learned a lot through testing.

As expected, drum placement and calibration were a challenge for people. Oftentimes the drums weren’t positioned right at the level of the surface, which caused confusion. Latency was more of an issue for faster beats. In some experiments we let folks play on an actual drum, which made its own sound. This decreased their tolerance for visual delay, since the auditory feedback came directly from the instrument and any lag in the visuals stood out against it. Given more time, we would have developed ways to predict hits and tighten the digital-tangible feedback loop.
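Hit prediction was left as future work. One simple approach is to extrapolate the hand’s descent and estimate when it will make contact, so that feedback can fire slightly early. This Python sketch rests on three assumptions: a horizontal surface at a known height, timestamped hand-height samples, and roughly constant velocity over the last frame. It is not what the prototype did. The function names and the 30 ms lookahead are hypothetical.

```python
from typing import Optional, Sequence, Tuple

Sample = Tuple[float, float]  # (timestamp in seconds, hand height in meters)

# Hypothetical lookahead: trigger feedback if contact is predicted within 30 ms.
LOOKAHEAD = 0.03


def predict_contact_time(samples: Sequence[Sample], surface_y: float) -> Optional[float]:
    """Estimate when the hand reaches surface_y by linear extrapolation.

    Uses the two most recent samples to estimate vertical velocity.
    Returns None if there is too little data or the hand isn't moving down.
    """
    if len(samples) < 2:
        return None
    (t0, y0), (t1, y1) = samples[-2], samples[-1]
    if t1 <= t0:
        return None
    velocity = (y1 - y0) / (t1 - t0)  # negative while the hand moves down
    if velocity >= 0:
        return None
    if y1 <= surface_y:
        return t1  # already at or below the surface
    return t1 + (surface_y - y1) / velocity


def should_trigger_early(samples: Sequence[Sample], surface_y: float, now: float) -> bool:
    """True if contact is predicted within LOOKAHEAD seconds of `now`."""
    contact = predict_contact_time(samples, surface_y)
    return contact is not None and contact - now <= LOOKAHEAD
```

Firing early accepts a small risk of false positives, such as a hand that stops just above the surface, in exchange for lower perceived latency. That latency matters most on faster beats.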

Buttons weren’t very responsive and required folks to push in and then pull their hand back. As with the drums, interactions broke down if a button wasn’t at the right distance from the user, which was often the case for children who tried Virtuoso. The prototype would also have benefited from a deeper understanding of, and support for, musical motion: things like expressiveness, swing, and aftertouch. Ideally, these qualities would be determined heuristically from a player’s subsequent interactions.

Extending the tool

There are plans to extend and polish Virtuoso XR, including additional instrument lessons, real-time and evaluative feedback on performance, a stronger educational lifecycle, and support for less cost-prohibitive devices that could be deployed in schools.

Virtuoso XR was presented as a demo at the MIT Reality Hack.